Handicapping the Algorithm

Now that we’ve developed the technology to manufacture public opinion, we need to find the tools to stop it.

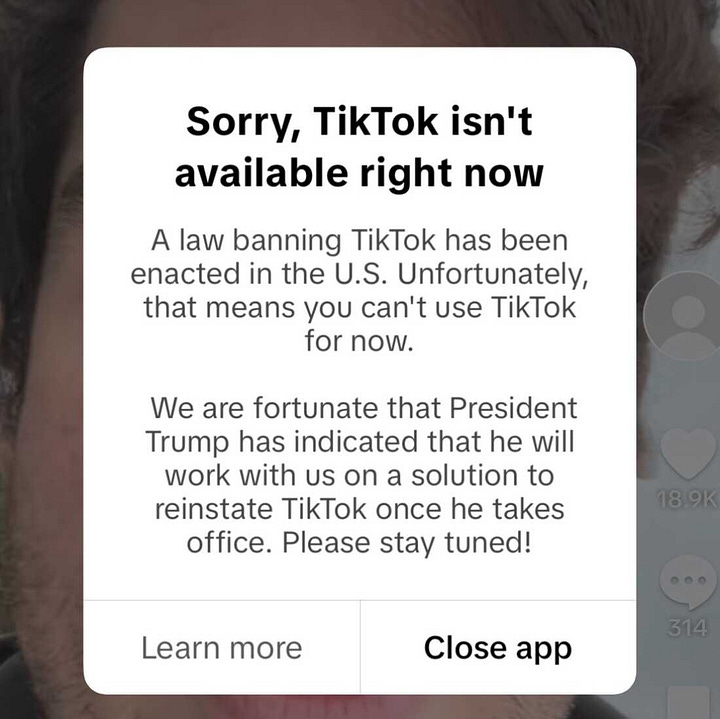

This week, a pair of messages were delivered directly into the palms of 170 million Americans. Though the messages were straightforward, they should be setting off alarm bells.

Clearly, Trump realized that having control over the on/off switch for the entertainment consumed by 170 million of his citizens could be a powerful way of cementing his cult of personality. Like a lollipop snatched from your hand, the US government took away your TikTok—but fear not, President Trump is working hard to get it back to you! And he delivered!

TikTok is a platform through which we can access the Algorithm. The Algorithm curates a stream of digital content tailored to hold the attention of each individual user. Companies pay to stick toothpaste ads in that stream, so the longer you scroll, the more money TikTok makes.

However, there’s another way social media companies make money using the Algorithm. Toothpaste companies pay social media companies to nudge people toward their brand of toothpaste. Governments have taken note of the immense power the Algorithm has over public opinion, and they pay to generate approval for their politics in just the same way.

The recent Romanian election is a dramatic case in point. Hell-bent on destabilizing the countries in its former empire, the Russian government picked out Călin Georgescu, a pro-Russian candidate in the Romanian presidential election, as its desired next president of Romania. The only problem was that Georgescu was polling around 5%. Unexpectedly, come election day, Georgescu catapulted to first place. How? The Russians paid TikTok to engineer public opinion.

TikTok knows what posts you like, which comments you read, how long you watch a reel, and the flavour of memes you find funny. From this, TikTok can build a political profile of each individual in a country, and sell politicians the ability to present themselves in the way you’re most receptive to. Got a problem with immigration? Here’s a clip of Georgescu pounding his fist with some podcast bros. Volunteer at an animal shelter? Here’s a clip of Georgescu feeding puppies at a kennel.

Georgescu’s rise was highly unexpected and Russian meddling wasn’t exactly inconspicuous. This time, Romania’s high court had the wisdom to annul the election. But where struggles for power hinge on fine margins, and foreign powers are more subtle, the Algorithm can determine, and likely already has determined, the outcomes of elections.

Perhaps even more disturbingly, we spend so much time sucked into the addictive stream of content fed to us by the Algorithm that it’s becoming difficult to tell which opinions are truly our own and which ones we hold because someone paid to implant them in us.

As the cherry on top, we all know the Algorithm is bad for us. Few people would lament cutting the time they spend on social media in half. Not only are we increasingly cognizant of the political destabilization the Algorithm can produce, we know that certain forms of social media use are addictions. Like gambling, the Algorithm exploits reward circuits in our brains to grab and hold our attention, all while someone turns a profit off it.

There are a few services that try to wean us off social media, but they aren’t very effective. Most are apps you can download that place a time delay on access to social media, or a daily time limit on its use. Our desire to doomscroll remains unchanged, and sometimes the ability to doomscroll is tangled cleverly together with social media functions we use in a productive manner.

The real solution is to handicap the Algorithm, not put up barriers to accessing it. Handicapping the Algorithm would mean making it less addictive, less of a part of our lives, and less of a tool for political control. We could intentionally make the Algorithm less potent—we just need the political will to make it happen.

Imagine an app that makes your feed just a little less interesting every day. Reels don’t autoplay on your explore page, and certain forms of particularly addictive ‘brainrot-y’ content slowly stop showing up. Gradually, your feed just becomes less interesting. Because this happens imperceptibly over the course of a couple of months, you’re down to an hour of social media a day without even noticing.
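To make the idea concrete, here is a minimal sketch of what gradual handicapping could look like in code. Everything in it is an assumption made for illustration: the `Post` fields, the two scores, the 60-day ramp, and the function names are hypothetical, and none of it reflects how TikTok or any real platform ranks content.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    engagement: float     # hypothetical predicted-engagement score in [0, 1]
    addictiveness: float  # hypothetical "brainrot" score in [0, 1]


def handicap_strength(day: int, ramp_days: int = 60) -> float:
    """Grow linearly from 0 to 1 over ramp_days, then stay at 1."""
    return min(max(day, 0) / ramp_days, 1.0)


def handicapped_feed(posts: list[Post], day: int, ramp_days: int = 60) -> list[Post]:
    """Rank posts by engagement, penalising addictive posts a bit more each day."""
    strength = handicap_strength(day, ramp_days)

    def score(post: Post) -> float:
        # On day 0 the score is plain engagement; once the ramp is complete,
        # a fully addictive post scores zero.
        return post.engagement * (1.0 - strength * post.addictiveness)

    return sorted(posts, key=score, reverse=True)


posts = [
    Post("viral_reel", engagement=0.9, addictiveness=0.9),
    Post("friend_update", engagement=0.5, addictiveness=0.1),
]
first_day = [p.post_id for p in handicapped_feed(posts, day=0)]
after_ramp = [p.post_id for p in handicapped_feed(posts, day=60)]
```

On day 0 the viral reel still tops the feed. By day 60 the friend's update outranks it, even though neither post has changed. The slow ramp is the point of the design: no single day's feed looks noticeably different from the one before.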

There’s nothing in this idea that’s technically impossible. It just happens not to be legal. To artificially handicap the Algorithm, software developers would have to have the right to make changes to the Algorithm with the explicit goal of making it less addictive. TikTok and Meta make their billions by harvesting and monetizing your attention. They will funnel as much money into the pockets of politicians and intellectual property lawyers as it takes to ensure the Algorithm remains as potent as possible, even if it degrades our democratic institutions and drains our free time.

Fortunately, there’s a slowly growing precedent for the Algorithm being restricted, although not handicapped. The Australian government recently banned social media for anyone under the age of 16, citing concerns for its young citizens’ wellbeing. Perhaps governments should consider nipping the social media problem in the bud—they should legislate that our feeds gradually become worse.

The problem is, such a move takes moral fibre. There’s no money to be made making an attention-harvesting technology worse, and there’s no way demagogues could resist the opportunity to use addictive social media platforms to consolidate their personality cults.

It feels strange that the way towards a brighter future might mean making existing technology worse. However, when technical achievements cause deep social harm once put into practice, the desire for a better life might point us in the direction of a world with worse, not better, technology.
